What if your image editor could really understand you — not just respond to keywords, but actually follow what you mean, and remember what you’ve said? That’s what Flux.1 Kontext was built for.

More than just an AIGC tool, Kontext has true language understanding. It listens to you, executes step by step, and — most importantly — remembers your past instructions. That means no need to repeat yourself, no unpredictable results, and no starting over.

In short: Say what you want. The image follows — and it follows precisely.

Before Kontext, users often hit these common walls:

- ❌ “I just wanted to change the hairstyle, not the whole face.”

- ❌ “Removing watermarks ruins the whole background.”

- ❌ “My character looks different in every AI-generated frame.”

- ❌ “I don’t know Photoshop — I just want to change the text on an image.”

Let’s walk through how F.1 Kontext solves these with precision.

What is Flux.1 Kontext?

Flux.1 Kontext is a cutting-edge multimodal model developed by the independent AI research group FluxAI. Designed to understand natural language prompts and apply them to image editing with precision, Kontext enables smart local edits, stylistic transformations, and consistent character modeling — all in a single workflow.

Unlike traditional tools, Flux.1 Kontext doesn’t just execute commands — it understands them. Whether you’re replacing elements, refining portraits, or altering text in images while preserving layout and font, Kontext brings unprecedented control to visual content creation.

It has already gained traction on platforms like Hugging Face and GitHub’s ComfyUI plugin ecosystem.

FluxAI’s mission is to make advanced visual storytelling as intuitive as having a conversation. With Flux.1 Kontext, creators can now edit, stylize, and animate with minimal effort — all from a single prompt.

Real-World Use Cases (With No Design Skills Needed)

Here are just a few examples where Kontext goes way beyond typical AI tools:

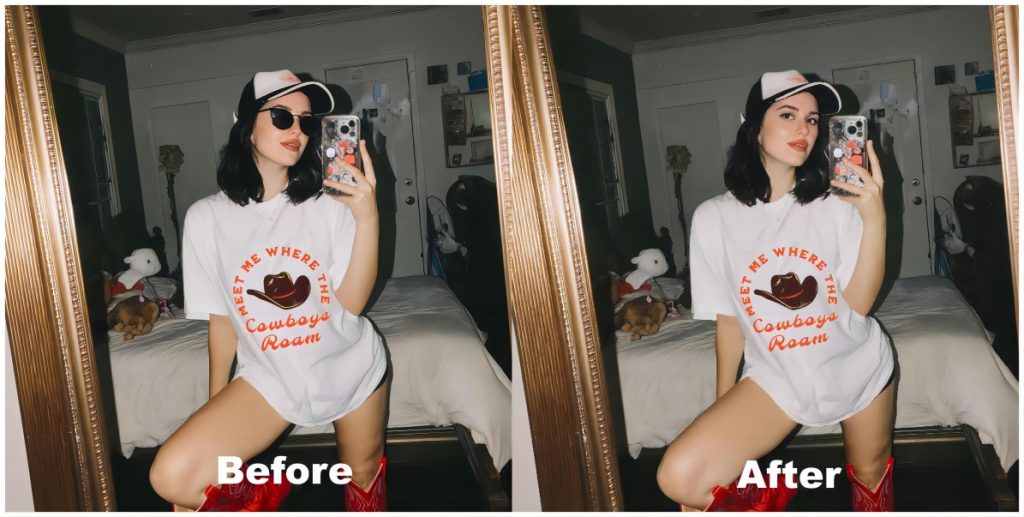

Precise Local Edits — Without Changing the Whole Image

💬 Real user need: “I just want to change the character’s hairstyle, not the whole picture. Why does it always regenerate everything?”

Most AI tools are all-or-nothing. Change a detail, and they redraw the entire scene.

With F.1 Kontext: You can surgically adjust specific elements like hairstyles, clothes, objects, or facial expressions — without altering the composition or breaking the visual flow.

Example use:

“Change her hairstyle to a ponytail, but keep her pose, face, and lighting the same.”

“Remove the sunglasses from the character.”

Result: The AI only edits what you told it to — and leaves the rest intact.

2. Replace or Delete Objects — Naturally

💬 Real user need: “Can I remove the extra person in the background? Or swap out this coffee cup?”

Most editing tools need careful manual selections, or guess wrong.

With F.1 Kontext: Just say what you want gone — or swapped — and it handles the rest.

Example use:

“Remove the extra person in the background.”

“Change the background behind the character to a vast blue ocean.”

“Remove watermark from image.”

Result: Clean, seamless replacements without ruining the background.

3. Keep Character Consistency Across Multiple Images

💬 Real user need: “Every time I generate a new pose, my character looks completely different. Can it just remember her face?”

AI often “forgets” your character with each generation — even if you want the same person.

With F.1 Kontext: The model remembers characters’ features — their face, hairstyle, expression — and carries them forward across different images, poses, and scenes.

Example use:

“Let the same girl now sit on the head of a sports car, cyberpunk style.”

Result: A consistent, coherent character across different contexts — essential for storytelling, branding, or multi-scene output.

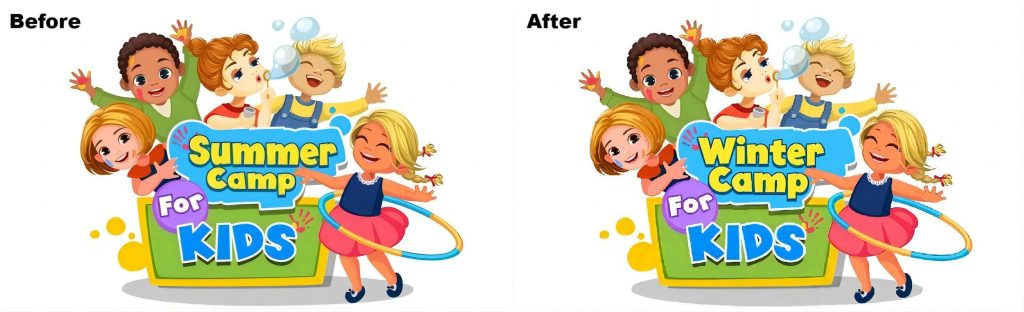

4. Directly Edit English Text Inside Images

💬 Real user need: “I want to update the promo image from ‘Winter Sale’ to ‘Spring Launch’ — but I don’t want to redo the design.”

Most tools can’t handle in-image text without breaking layout or style.

With F.1 Kontext: You can simply tell it what to change, and it updates only the text, keeping typography, layout, and styling intact.

Example use:

“Change the text from ‘Summer Camp’ to ‘Winter Camp’.”

Result: Same font, same style — updated message.

5. One-Tap Style Transfer — Without Breaking Composition

💬 Real user need: “I love switching styles, but why does it always mess up the face or layout?”

Style filters often distort key elements or shift composition unexpectedly.

With F.1 Kontext: You can apply a completely new style — pixel art, watercolor, anime, cyberpunk — and still retain structure, posture, facial identity, and layout.

Example use:

“See how the viral Labubu character can take on different styles — all with just a prompt!.”

Result: Structure stays — only the mood and style evolve.

What Makes Flux.1 Kontext Different?

- ✅ Understands full sentences, not just prompt keywords

- ✅ Executes edits step by step like a human assistant

- ✅ Remembers context across sessions — no more restarts

- ✅ No prompt engineering required — just speak naturally

Kontext isn’t just another generative AI — it’s a reliable creative partner that respects your intent and brings your ideas to life exactly as you imagined.

Final Thoughts: A Smarter, More Human Way to Create

F.1 Kontext isn’t just an image tool. It’s a communication interface between your ideas and visual execution.Whether you’re a creator, educator, marketer, or hobbyist — Kontext delivers power, control, and creativity like never before.

Get Ready to Create Smarter — With Even Less Effort

F.1 Kontext is already redefining how we interact with AI-powered image editing. And here’s the exciting part:

We’re about to launch Pixmancer-exclusive scene templates powered by F.1 Kontext — designed to make your creative process even easier.

Whether you want to tweak a hairstyle, change an outfit, or switch up the vibe, you’ll soon be able to do it with just one tap — no prompt writing required.

🙋 Frequently Asked Questions

Q1: Can I use multiple edits in one go?

A1: Yes! Kontext supports step-by-step commands — and it remembers what you changed before.

Q2: Is it limited to people and portraits?

A2: Not at all. It works for any image type — products, animals, scenes, and even text-based layouts.

Q3: Does it support non-English prompts?

A3: Currently optimized for English, but multi-language support is coming.

Written by the Pixmancer Team — Empowering creators with smarter AI tools.👉 Discover all Pixmancer features here

This looks cool, it understand users appropriately, a lot of work must have been done on the model.

love 的photos